
Virtual Memory

October 27, 1998

Topics

- Motivation

- Address Translation

- Accelerating with TLBs

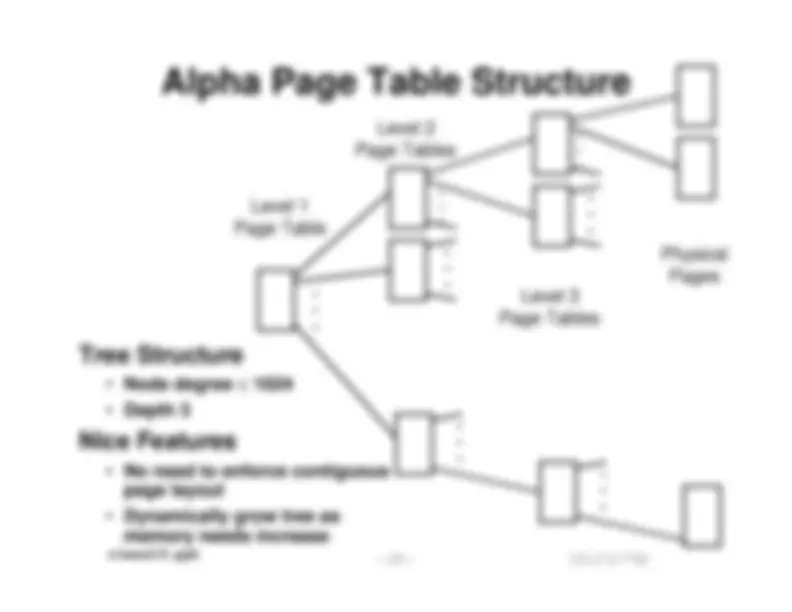

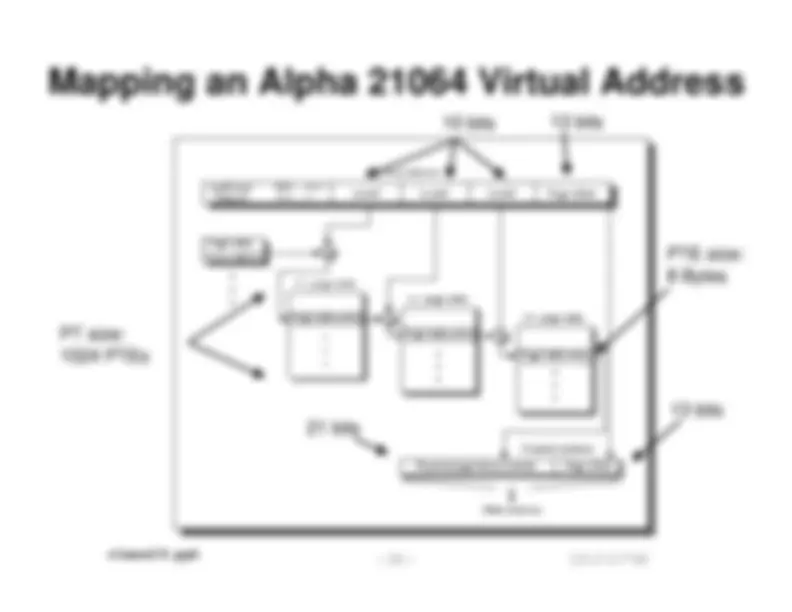

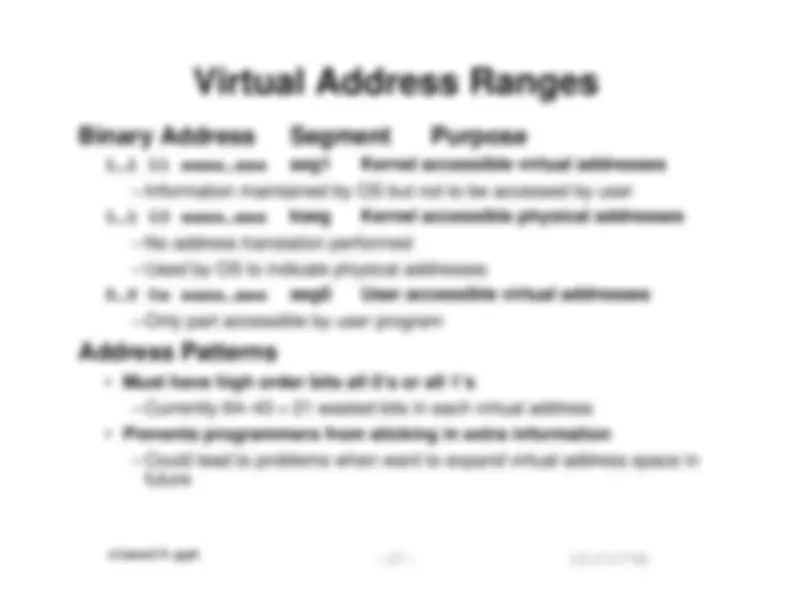

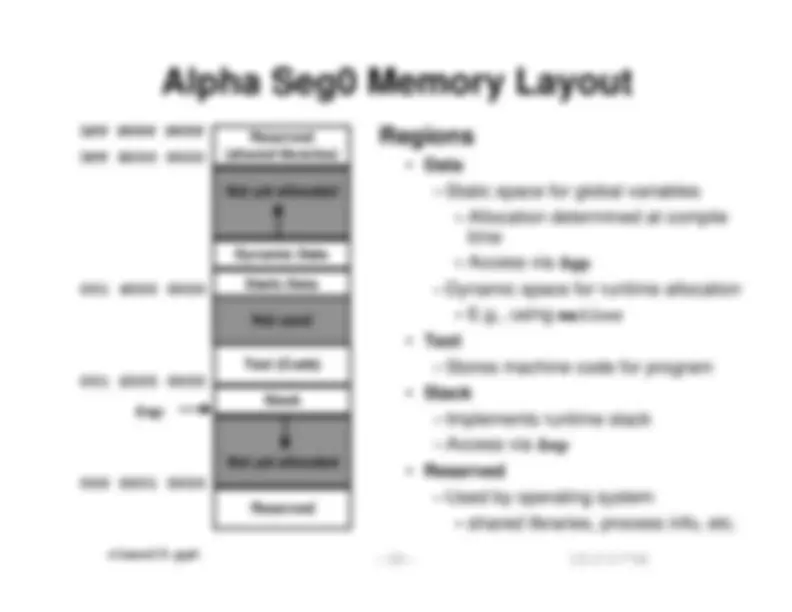

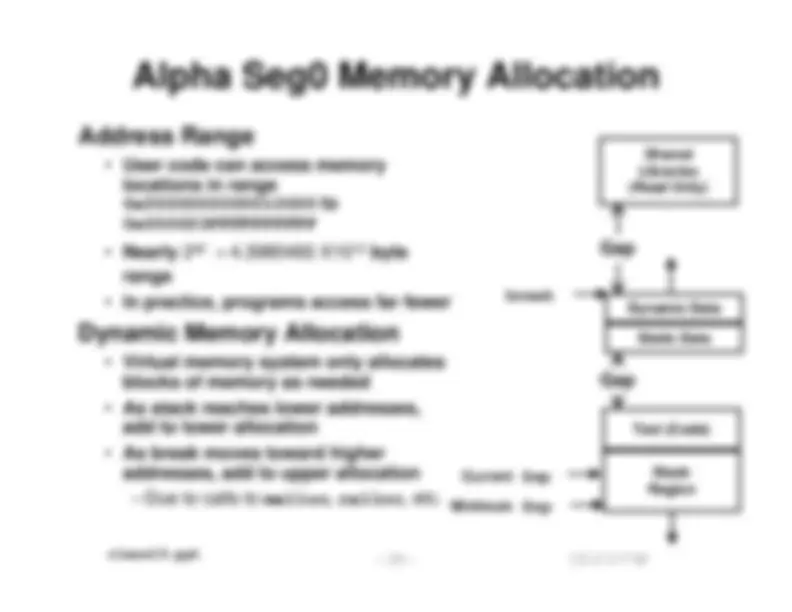

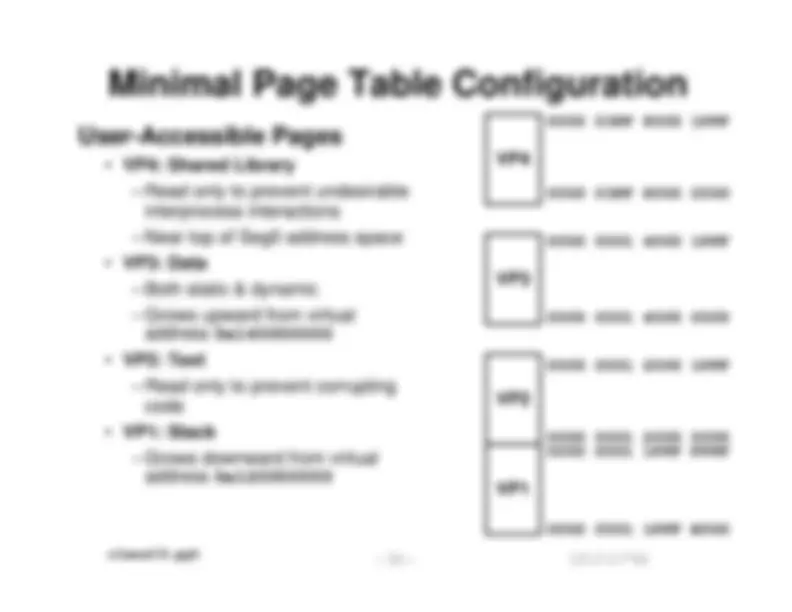

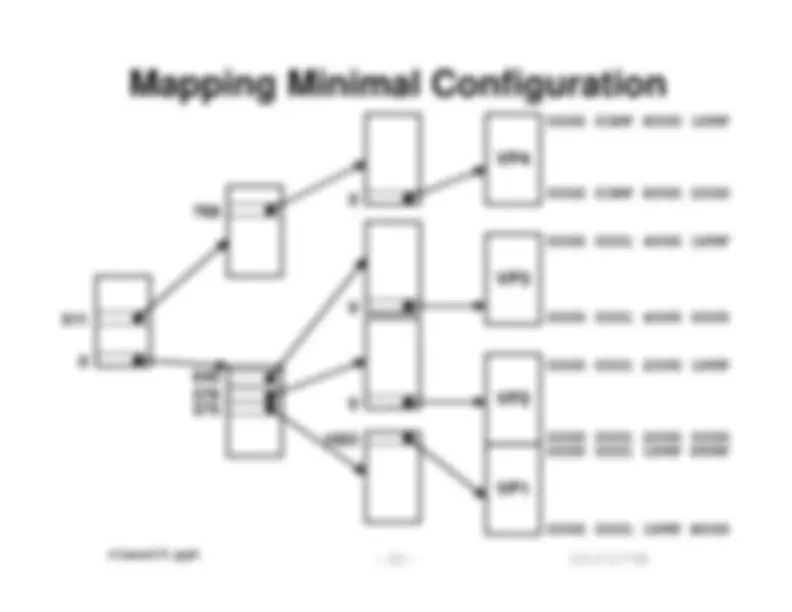

- Alpha 21X64 memory system

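Levels in Memory Hierarchy

[Figure: CPU registers → cache → memory → disk. Levels get larger, slower, and cheaper moving away from the CPU. Transfer units are 4 B between registers and cache, 8 B between cache and memory, and 4 KB between memory and disk. The cache ↔ memory boundary is managed as a cache; the memory ↔ disk boundary is managed as virtual memory.]

| | Register | Cache | Memory | Disk Memory |
|---|---|---|---|---|
| size | 200 B | 32 KB / 4 MB | 128 MB | 20 GB |
| speed | 3 ns | 6 ns | 100 ns | 10 ms |
| $/Mbyte | | $100/MB | $1.50/MB | $0.06/MB |
| block size | 4 B | 8 B | 4 KB | |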
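A quick worked calculation with the 1998 memory and disk figures from the hierarchy slide shows the trade-off virtual memory exploits: disk is much cheaper per megabyte but about 100,000 times slower to access. This is a sketch; treating 1 GB as 1024 MB is an assumption, and the names `LEVELS`, `cost_dollars`, `slowdown`, and `price_ratio` are illustrative.

```python
# Per-level figures from the 1998 memory-hierarchy slide.
NS, MS = 1e-9, 1e-3

LEVELS = {
    # name: (capacity in MB, access time in seconds, dollars per MB)
    "memory": (128, 100 * NS, 1.50),
    "disk": (20 * 1024, 10 * MS, 0.06),  # assumes 1 GB = 1024 MB
}

def cost_dollars(level):
    """Total price of the level's capacity at the quoted $/MB."""
    capacity_mb, _, dollars_per_mb = LEVELS[level]
    return capacity_mb * dollars_per_mb

def slowdown(slower, faster):
    """How many times longer an access to `slower` takes than one to `faster`."""
    return LEVELS[slower][1] / LEVELS[faster][1]

def price_ratio(expensive, cheap):
    """How many times more a megabyte of `expensive` costs than one of `cheap`."""
    return LEVELS[expensive][2] / LEVELS[cheap][2]

print(f"128 MB of memory: ${cost_dollars('memory'):,.2f}")
print(f"20 GB of disk:    ${cost_dollars('disk'):,.2f}")
print(f"disk is {slowdown('disk', 'memory'):,.0f}x slower and "
      f"{price_ratio('memory', 'disk'):.0f}x cheaper per MB than memory")
```

The gap in access time is what makes every page miss so expensive in the design discussion that follows.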

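[Figure: Process 1 and Process 2 each have virtual addresses (VA) 0 to N–1, divided into virtual pages VP 1, VP 2, …. Address translation maps each process's virtual pages to physical pages (PP) in physical addresses (PA) 0 to M–1. A physical page holding read-only library code is mapped by both processes.]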

Address Spaces

- Virtual and physical address spaces divided into equal-sized blocks

- “Pages” (both virtual and physical)

- Virtual address space typically larger than physical

- Each process has separate virtual address space

Other Motivations

Simplifies memory management

- Main reason today

- Can have multiple processes resident in physical memory

- Their program addresses mapped dynamically

- Address 0x100 for process P1 doesn’t collide with address 0x100 for process P2

- Allocate more memory to process as its needs grow
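A minimal sketch of why address 0x100 in P1 doesn't collide with 0x100 in P2: each process has its own page table, so the same virtual address translates to different physical pages. The 4 KB page size, the page-table contents, and the name `translate` are hypothetical; mapping one page of each process to the same physical page models shared read-only library code.

```python
PAGE_SIZE = 4096   # assumed 4 KB pages
OFFSET_BITS = 12   # log2(PAGE_SIZE)

# Hypothetical per-process page tables: virtual page number -> physical page number.
PAGE_TABLES = {
    "P1": {0: 2, 1: 7},
    "P2": {0: 5, 1: 7},  # VPN 1 of both processes shares physical page 7
}

def translate(process, va):
    """Translate va using the page table of `process` only."""
    vpn, offset = va >> OFFSET_BITS, va & (PAGE_SIZE - 1)
    ppn = PAGE_TABLES[process][vpn]
    return (ppn << OFFSET_BITS) | offset

print(hex(translate("P1", 0x100)), hex(translate("P2", 0x100)))
```

Because the OS chooses each mapping, it can also grow a process by adding entries to its table without moving anything already resident.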

Provides Protection

- One process can’t interfere with another

- Since they operate in different address spaces

- Process cannot access privileged information

- Different sections of address space have different access permissions

Macintosh Memory Management

Allocation / Deallocation

- Similar to free-list management of malloc/free

Compaction

- Can move any object and just update the (unique) pointer in pointer table

[Figure: “Handles”. Processes P1 and P2 each have their own pointer table; the table entries point to blocks A–E in a single shared address space.]
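The handle idea can be sketched as a toy heap in which programs hold an index into a pointer table instead of a raw address, so compaction only has to move the bytes and patch one table entry per block. This is a simplified model, not the actual Macintosh Memory Manager API: `HandleHeap`, its methods, and the bump-pointer allocator (standing in for free-list management) are assumptions. A program must dereference the handle again after compaction, since any raw view taken before the move is stale.

```python
class HandleHeap:
    """Toy handle-based heap: callers keep handles (indices into a pointer
    table), so compaction can move blocks and patch one table entry each."""

    def __init__(self, size):
        self.memory = bytearray(size)
        self.table = []  # handle -> (start, length), or None once disposed
        self.top = 0     # next free byte (bump allocation keeps the sketch short)

    def new_handle(self, length):
        if self.top + length > len(self.memory):
            raise MemoryError("heap full; compact() may free space")
        self.table.append((self.top, length))
        self.top += length
        return len(self.table) - 1

    def dispose(self, handle):
        self.table[handle] = None

    def deref(self, handle):
        """Current view of the block; dereference again after any compaction."""
        start, length = self.table[handle]
        return memoryview(self.memory)[start:start + length]

    def compact(self):
        """Slide live blocks toward address 0, updating only the pointer table."""
        dest = 0
        live = sorted((entry[0], h) for h, entry in enumerate(self.table) if entry)
        for start, h in live:
            length = self.table[h][1]
            self.memory[dest:dest + length] = self.memory[start:start + length]
            self.table[h] = (dest, length)
            dest += length
        self.top = dest

heap = HandleHeap(64)
a, b = heap.new_handle(16), heap.new_handle(16)
heap.deref(b)[:5] = b"hello"
heap.dispose(a)
heap.compact()                      # block b moves from address 16 to 0
print(heap.table[b], bytes(heap.deref(b)[:5]))
```

Note what the model cannot provide: every block lives in one shared address space, so nothing stops a "wild write" through a bad address from landing in another process's block.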

Macintosh vs. VM-based Mem. Mgmt

Both

- Can allocate, deallocate, and move memory blocks

Macintosh

- Block is variable-sized

- May be very large or very small

- Requires contiguous allocation

- No protection

- “Wild write” by one process can corrupt another’s data

VM-Based

- Block is fixed size

- Single page

- Can map contiguous range of virtual addresses to disjoint ranges of physical addresses

- Provides protection

- Between processes

- So that process cannot corrupt OS information

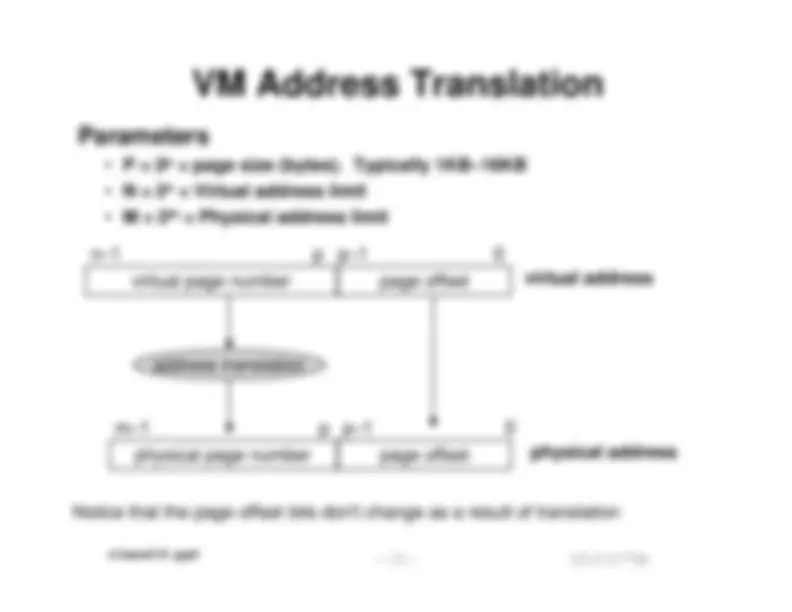

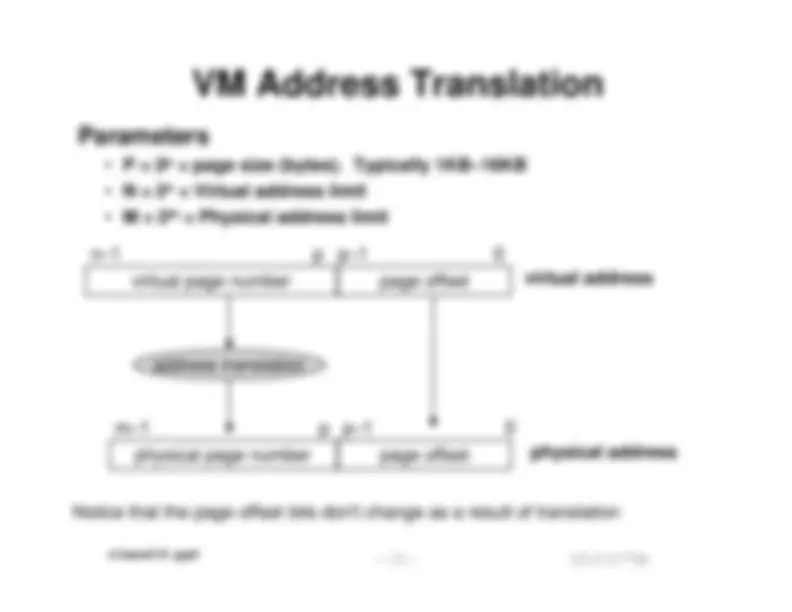

[Figure: address translation. Virtual address = virtual page number (bits n–1 to p) followed by page offset (bits p–1 to 0). Physical address = physical page number (bits m–1 to p) followed by page offset (bits p–1 to 0).]

Notice that the page offset bits don't change as a result of translation

VM Address Translation

Parameters

- $P = 2^p$ = page size (bytes). Typically 1 KB–16 KB

- $N = 2^n$ = virtual address limit

- $M = 2^m$ = physical address limit
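With these parameters, translation is pure bit manipulation: the low p bits pass through unchanged as the page offset, and only the page-number bits are replaced. A minimal sketch; the function names and the example values (p = 13 for 8 KB pages, n = 32, m = 24, PPN 0x2A) are assumptions.

```python
def split_va(va, p, n):
    """Split an n-bit virtual address into (virtual page number, page offset)."""
    if not 0 <= va < (1 << n):
        raise ValueError("virtual address outside 0 .. N-1")
    return va >> p, va & ((1 << p) - 1)

def make_pa(ppn, offset, p, m):
    """Concatenate a physical page number with the untouched page offset."""
    pa = (ppn << p) | offset
    if pa >= (1 << m):
        raise ValueError("physical address outside 0 .. M-1")
    return pa

vpn, offset = split_va(0x12345, p=13, n=32)  # 8 KB pages
pa = make_pa(0x2A, offset, p=13, m=24)       # 0x2A: a made-up PPN
print(hex(vpn), hex(offset), hex(pa))
```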

Page Tables

Page Table Operation

Translation

- Separate (set of) page table(s) per process

- VPN forms index into page table

Computing Physical Address

- Page Table Entry (PTE) provides information about page

- Valid bit = 1 ==> page in memory: use the physical page number (PPN) to construct the address

- Valid bit = 0 ==> page in secondary memory: page fault; the page must be loaded into main memory before continuing
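The lookup just described can be sketched as follows: the VPN indexes the page table, a valid PTE supplies the PPN, and an invalid one raises a page fault that is serviced before the access is retried. The `PTE` fields beyond the valid bit and PPN, the `PageFault` exception, and the `load_page` callback (standing in for the OS fetching the page from disk) are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class PTE:
    valid: bool
    ppn: int = 0         # physical page number, meaningful only if valid
    disk_block: int = 0  # hypothetical: where the page lives on disk if not valid

class PageFault(Exception):
    pass

def translate(page_table, va, p):
    """Index the page table with the VPN and build the physical address."""
    vpn, offset = va >> p, va & ((1 << p) - 1)
    pte = page_table[vpn]
    if not pte.valid:
        raise PageFault(vpn)
    return (pte.ppn << p) | offset

def access(page_table, va, p, load_page):
    """Translate va; on a page fault, load_page(vpn) brings the page into
    memory and returns its new PPN, then the translation is retried."""
    try:
        return translate(page_table, va, p)
    except PageFault as fault:
        vpn = fault.args[0]
        page_table[vpn] = PTE(valid=True, ppn=load_page(vpn))
        return translate(page_table, va, p)

page_table = [PTE(valid=True, ppn=3), PTE(valid=False, disk_block=40)]
print(hex(access(page_table, 0x1010, 12, lambda vpn: 9)))
```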

Checking Protection

- Access rights field indicates allowable access

- E.g., read-only, read-write, execute-only

- Typically support multiple protection modes (e.g., kernel vs. user)

- Protection violation fault if the process doesn’t have the necessary permission
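A protection check can be sketched as a test on the PTE's access-rights field plus a kernel/user mode bit, performed during translation. The flag names, the `kernel_only` field, and `ProtectionFault` are assumptions for illustration, not a particular architecture's PTE layout.

```python
from enum import Flag, auto

class Access(Flag):
    READ = auto()
    WRITE = auto()
    EXEC = auto()

READ_ONLY = Access.READ
READ_WRITE = Access.READ | Access.WRITE
EXECUTE_ONLY = Access.EXEC

class ProtectionFault(Exception):
    pass

def check_access(rights, kernel_only, requested, user_mode):
    """Return True if the access is allowed, else raise ProtectionFault.

    rights:      access-rights field of the PTE (a set of Access flags)
    kernel_only: True if the page may be touched only in kernel mode
    """
    if kernel_only and user_mode:
        raise ProtectionFault("user-mode access to a kernel-only page")
    if requested not in rights:
        raise ProtectionFault(f"{requested} not allowed; page permits {rights}")
    return True

print(check_access(READ_WRITE, False, Access.WRITE, user_mode=True))
```

Because the check happens as part of translation, the cache behind it never needs to know about permissions.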

VM design issues

Everything Driven by Enormous Cost of Misses:

- Hundreds of thousands to millions of clocks

- vs. a few to tens of clocks for cache misses

- Disks are high latency

- Typically 10 ms access time

- Moderate disk to memory bandwidth

- 10 MBytes/sec transfer rate

Large Block Sizes:

- Typically 1KB–16 KB

- Amortize high access time

- Reduce miss rate by exploiting spatial locality
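Plugging the slide's disk figures (10 ms access, 10 MBytes/sec) into a simple miss-time model shows why large pages amortize the access time: the fixed 10 ms dominates, so a 16× larger page costs only slightly more time per miss. The 200 MHz clock and the 1 MB = 2**20 bytes convention are assumptions for illustration.

```python
ACCESS_TIME_S = 10e-3       # typical disk access time (from the slide)
BANDWIDTH_BPS = 10 * 2**20  # 10 MBytes/sec; 1 MB = 2**20 bytes is an assumption
CLOCK_HZ = 200e6            # hypothetical 200 MHz processor

def page_miss_time(page_bytes):
    """Seconds to service one page fault: fixed access time plus transfer time."""
    return ACCESS_TIME_S + page_bytes / BANDWIDTH_BPS

for kb in (1, 4, 16):
    page_bytes = kb * 1024
    t = page_miss_time(page_bytes)
    print(f"{kb:2d} KB page: {t * 1e3:6.2f} ms, "
          f"{t * CLOCK_HZ:>11,.0f} clocks, "
          f"{t / page_bytes * 1e9:7,.0f} ns per byte")
```

Under these assumptions a 16 KB page takes about 15% longer to fetch than a 1 KB page (11.56 ms vs. 10.10 ms), while the cost per byte drops by more than an order of magnitude, and every miss still costs about two million clocks.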

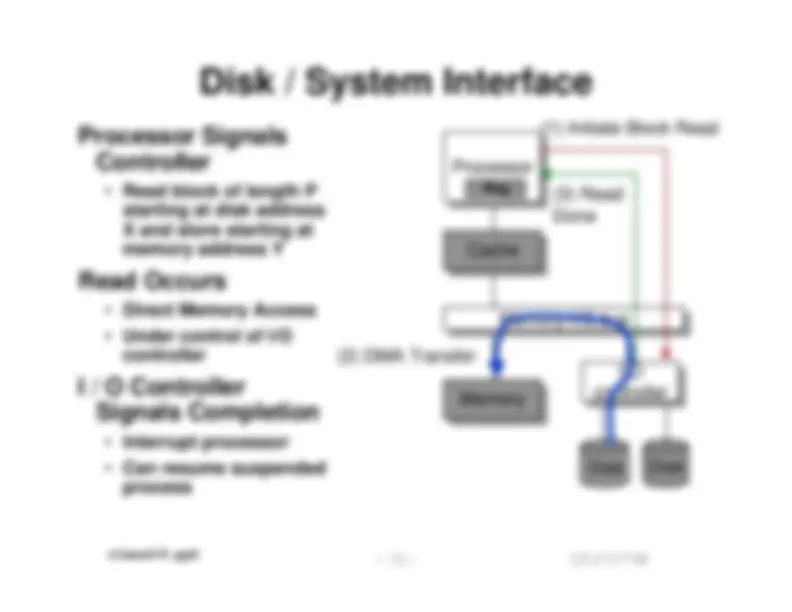

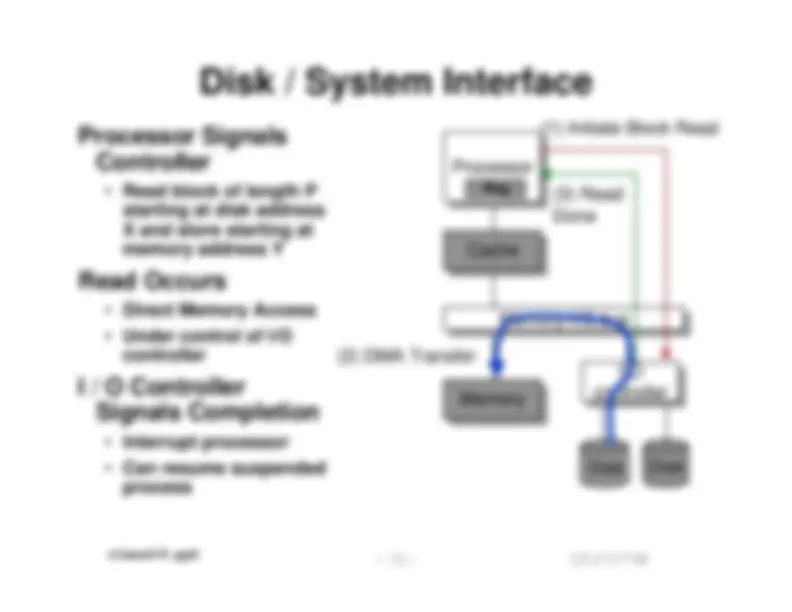

Perform Context Switch While Waiting

- Memory filled from disk by direct memory access

- Meanwhile, processor can be executing other processes

VM design issues (cont)

Fully Associative Page Placement

- Eliminates conflict misses

- Every miss is a killer, so the lower miss rate is worth a slower hit time

Use Smart Replacement Algorithms

- Handle misses in software

- Plenty of time to get job done

- Vs. caching where time is critical

- Miss penalty is so high anyway, no reason to handle in hardware

- Small improvements pay big dividends

Write Back Only

- Disk access too slow to afford write through
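The slides do not name a particular replacement algorithm; one common software approximation of LRU is the second-chance ("clock") algorithm, sketched here together with write-back: a page is copied to disk only when it is evicted and only if it is dirty. The `Frame` layout and the `write_back` callback are hypothetical.

```python
class Frame:
    """Bookkeeping for one physical page frame (hypothetical layout)."""
    def __init__(self, vpn):
        self.vpn = vpn
        self.referenced = True  # set on every access (by hardware, in a real system)
        self.dirty = False      # set on writes; a dirty page must be written back

class ClockReplacer:
    def __init__(self, num_frames, write_back):
        self.frames = [None] * num_frames
        self.hand = 0
        self.write_back = write_back  # callback(vpn): copy a dirty page to disk

    def touch(self, slot, write=False):
        """Record an access to the page held in `slot`."""
        frame = self.frames[slot]
        frame.referenced = True
        frame.dirty = frame.dirty or write

    def _choose_victim(self):
        # Sweep the hand; referenced pages get a second chance (bit cleared).
        while True:
            frame = self.frames[self.hand]
            if frame is None or not frame.referenced:
                return self.hand
            frame.referenced = False
            self.hand = (self.hand + 1) % len(self.frames)

    def load(self, vpn):
        """Bring vpn into a frame, evicting if needed; return the frame number."""
        slot = self._choose_victim()
        victim = self.frames[slot]
        if victim is not None and victim.dirty:
            self.write_back(victim.vpn)  # write-back: disk sees the page only at eviction
        self.frames[slot] = Frame(vpn)
        self.hand = (slot + 1) % len(self.frames)
        return slot

written = []
clock = ClockReplacer(2, written.append)
a, b = clock.load(0xA), clock.load(0xB)
clock.touch(a, write=True)
clock.load(0xC)   # evicts dirty page 0xA, which is written back
print(written)
```

Running in software is affordable here: even a sweep over thousands of frames is tiny next to a 10 ms disk access.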

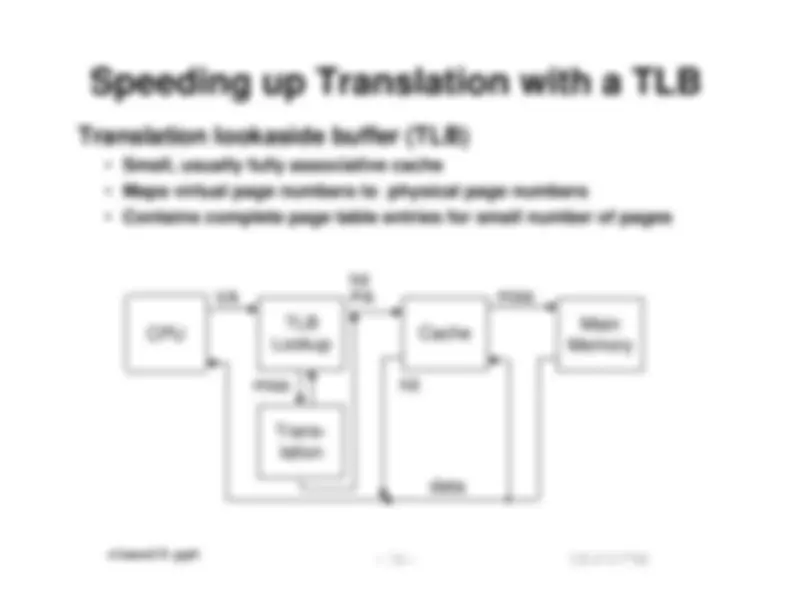

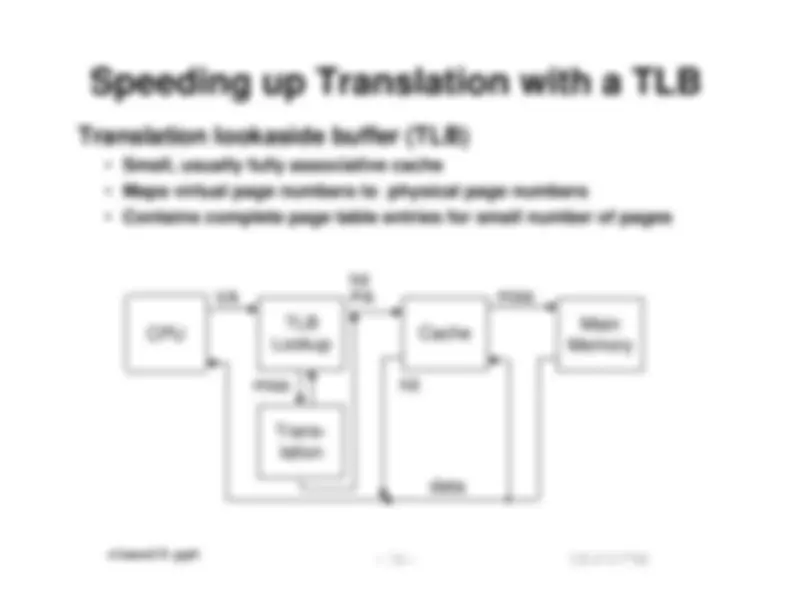

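[Figure: CPU → translation → cache → main memory. The CPU issues a virtual address (VA); translation produces a physical address (PA) that is looked up in the cache; on a hit the cache returns the data, on a miss the block is fetched from main memory.]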

Integrating VM and cache

Most Caches “Physically Addressed”

- Accessed by physical addresses

- Allows multiple processes to have blocks in cache at same time

- Allows multiple processes to share pages

- Cache doesn’t need to be concerned with protection issues

- Access rights checked as part of address translation

Perform Address Translation Before Cache Lookup

- But this could itself involve a memory access (to read the page table entry)

- Of course, page table entries can also become cached

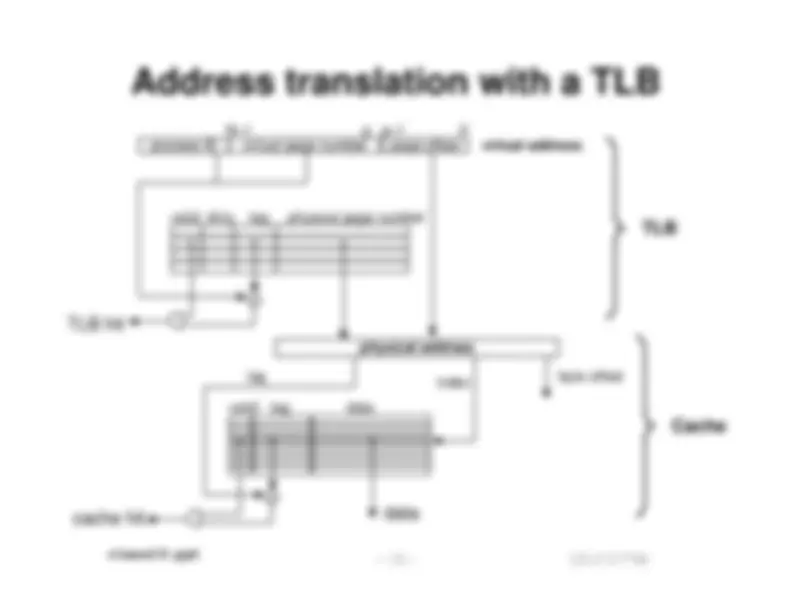

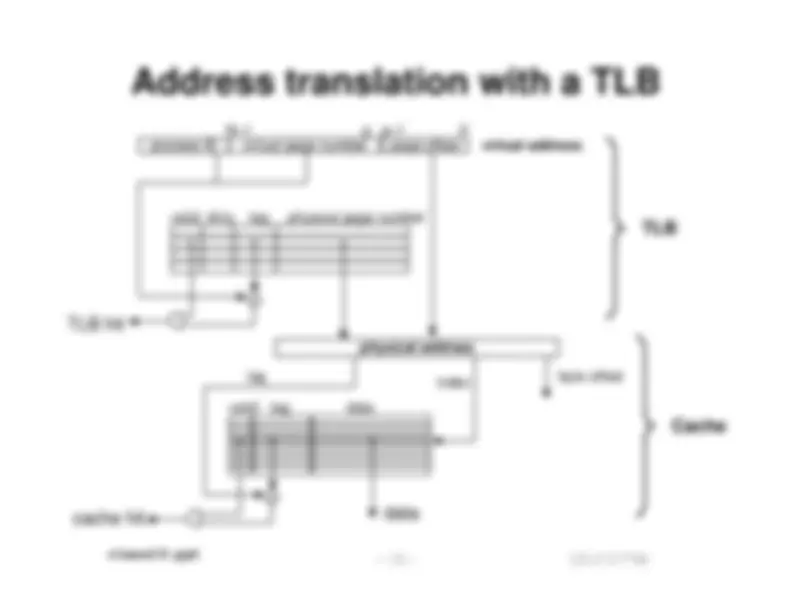

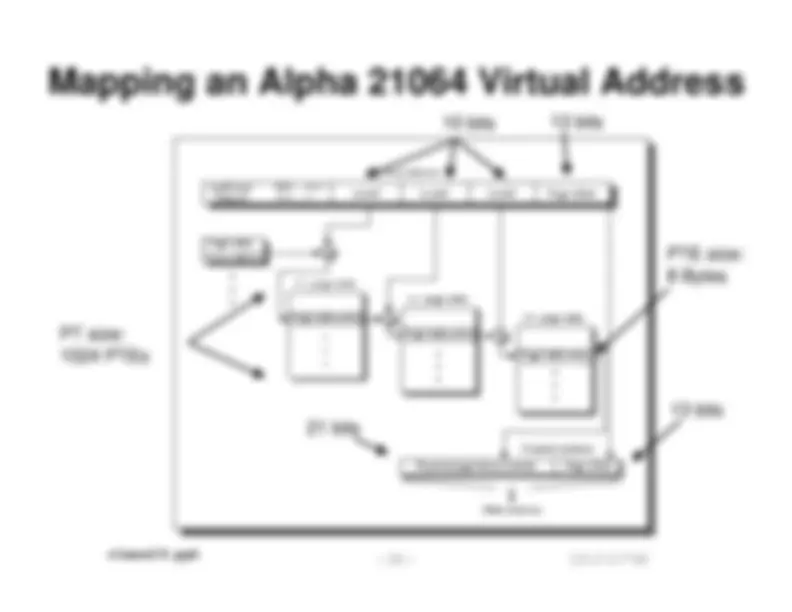

Address translation with a TLB

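[Figure: the virtual address (virtual page number in bits n–1 to p, page offset in bits p–1 to 0), together with the process ID, is compared against the tags of the TLB entries (valid, tag, physical page number, dirty bits); a match is a TLB hit and supplies the physical page number. The resulting physical address is split into tag, index, and byte offset to access the cache (valid, tag, data); a tag match is a cache hit and returns the data.]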
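The TLB path can be sketched as a fully associative lookup on (process ID, VPN), followed by splitting the physical address into cache tag, index, and byte offset. The entry layout, the `page_table_walk` callback used on a miss, and the refill policy (fill an invalid slot, else evict the oldest entry) are assumptions, not the behavior of any specific processor.

```python
from collections import namedtuple

# Hypothetical TLB entry: valid bit, tag (process ID + VPN), PPN, dirty bit.
TLBEntry = namedtuple("TLBEntry", "valid pid vpn ppn dirty")

def tlb_translate(tlb, pid, va, p, page_table_walk):
    """Translate va for process pid; return (physical address, hit)."""
    vpn, offset = va >> p, va & ((1 << p) - 1)
    for entry in tlb:  # hardware compares all tags in parallel
        if entry.valid and entry.pid == pid and entry.vpn == vpn:
            return (entry.ppn << p) | offset, True
    ppn = page_table_walk(pid, vpn)  # TLB miss: consult the page table
    new = TLBEntry(True, pid, vpn, ppn, False)
    free = next((i for i, e in enumerate(tlb) if not e.valid), None)
    if free is not None:
        tlb[free] = new  # fill an empty slot first
    else:
        tlb.pop(0)       # otherwise evict the oldest entry (FIFO)
        tlb.append(new)
    return (ppn << p) | offset, False

def cache_fields(pa, offset_bits, index_bits):
    """Split a physical address into (tag, index, byte offset) for the cache."""
    byte_offset = pa & ((1 << offset_bits) - 1)
    index = (pa >> offset_bits) & ((1 << index_bits) - 1)
    tag = pa >> (offset_bits + index_bits)
    return tag, index, byte_offset

tlb = [TLBEntry(True, 1, 0x4, 0x20, False), TLBEntry(False, 0, 0, 0, False)]
pa, hit = tlb_translate(tlb, 1, 0x4123, 12, lambda pid, vpn: 0x99)
print(hex(pa), hit, cache_fields(pa, offset_bits=5, index_bits=8))
```

Including the process ID in the tag lets entries from several processes coexist, so the TLB need not be flushed on every context switch.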

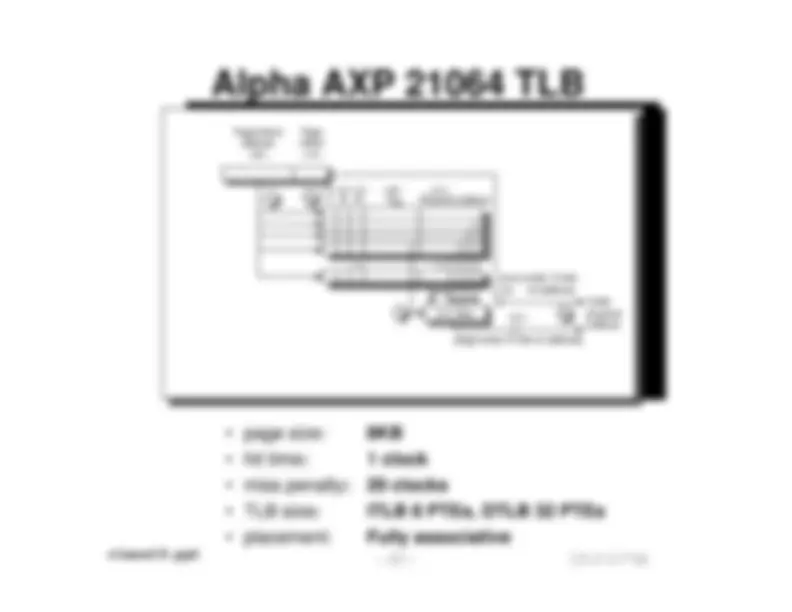

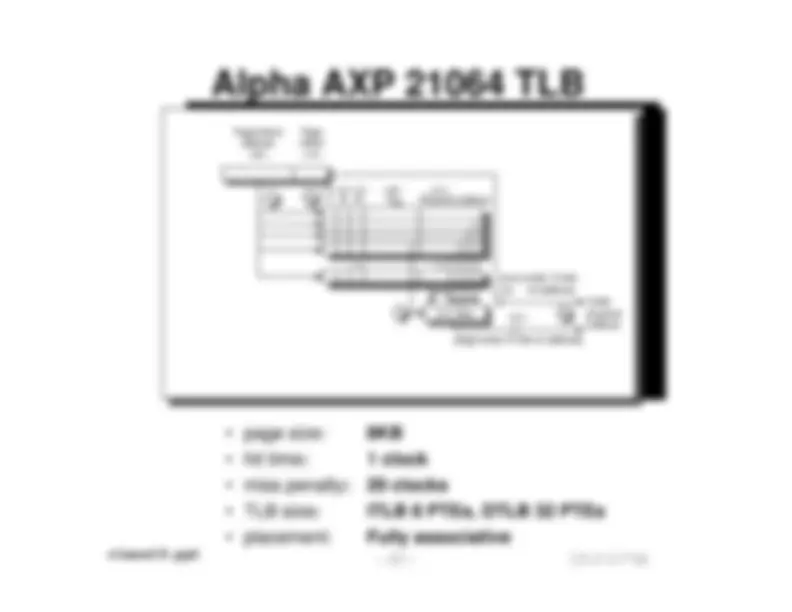

Alpha AXP 21064 TLB

- page size: 8 KB

- hit time: 1 clock

- miss penalty: 20 clocks

- TLB size: ITLB 8 PTEs, DTLB 32 PTEs

- placement: Fully associative
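The 21064 figures support a few quick derived numbers: 8 KB pages leave 13 offset bits untranslated, the TLBs map 64 KB (ITLB) and 256 KB (DTLB) at once, and average translation time follows hit time + miss rate × miss penalty. The slide gives no TLB miss rates, so the rates below are purely illustrative assumptions.

```python
PAGE_SIZE = 8 * 1024            # 8 KB pages
HIT_TIME, MISS_PENALTY = 1, 20  # clocks
ITLB_ENTRIES, DTLB_ENTRIES = 8, 32

OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # log2(8192) = 13
ITLB_REACH = ITLB_ENTRIES * PAGE_SIZE     # 64 KB of instructions mapped
DTLB_REACH = DTLB_ENTRIES * PAGE_SIZE     # 256 KB of data mapped

def avg_translation_clocks(miss_rate):
    """Average translation time = hit time + miss rate * miss penalty."""
    return HIT_TIME + miss_rate * MISS_PENALTY

for rate in (0.01, 0.05):  # illustrative miss rates, not measured 21064 figures
    print(f"TLB miss rate {rate:.0%}: {avg_translation_clocks(rate):.2f} clocks")
```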