Study with the several resources on Docsity

Earn points by helping other students or get them with a premium plan

Prepare for your exams

Study with the several resources on Docsity

Earn points to download

Earn points by helping other students or get them with a premium plan

Community

Ask the community for help and clear up your study doubts

Discover the best universities in your country according to Docsity users

Free resources

Download our free guides on studying techniques, anxiety management strategies, and thesis advice from Docsity tutors

Mixture Models, Generative Models, Naive Bayes Classifier, EM Algorithm, Semi-Parametric Models, Parametric Mixtures, Mixture, Likelihood, Mixture Density Estimation, Assignment, Expected Likelihood, Gaussian Mixture, Intro to EM, Greg Shakhnarovich, Lecture Slides, Introduction to Machine Learning, Computer Science, Toyota Technological Institute at Chicago, United States of America.

Typology: Lecture notes

1 / 27

This page cannot be seen from the preview

Don't miss anything!

TTIC 31020: Introduction to Machine Learning

Instructor: Greg Shakhnarovich

TTI–Chicago

November 1, 2010

General idea: assume (pretend?) p(x | y) comes from a certain parametric class, p(x | y; θy)

Estimate ̂θy from data in each class

Under this estimate, select class with highest p(x 0 | y; ̂θy)

Example: Gaussian model

Semi-parametric models

the EM algorithm

So far, we have assumed that each class has a single coherent model.

−6−8 −6 −4 −2 0 2 4 6 8 10

−

−

0

2

4

6

8

10

Images of the same person under different conditions: with/without glasses, different expressions, different views.

Images of the same category but different sorts of objects: chairs with/without armrests.

Multiple topics within the same document.

Different ways of pronouncing the same phonemes.

Assumptions:

A mixture model:

−6^ −4 −4 −2 0 2 4 6 8

−

0

2

4

6

8

10

p(x; π) =

∑^ k

c=

p(y = c)p (x | y = c).

πc , p(y = c) are the mixing probabilities

We need to parametrize the component densities p (x | y = c).

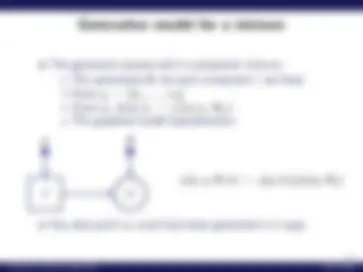

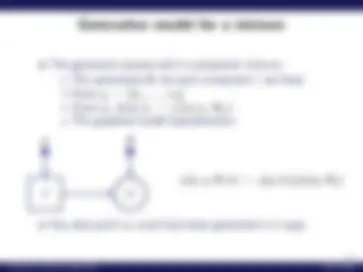

The generative process with k-component mixture:

y (^) x

π θ

p(x, y; θ, π) = p(y; π)·p(x|y; θy)

Any data point xi could have been generated in k ways.

If the c-th component is a Gaussian, p (x | y = c) = N (x; μc, Σc), then

p(x; θ, π) =

∑^ k

c=

πc · N (x; μc, Σc) ,

where θ = [μ 1 ,... , μk, Σ 1 ,... , Σk].

The graphical model

y (^) x

π μ^1 ,...,k Σ^1 ,...,k

Suppose that we do observe yi ∈ { 1 ,... , k} for each i = 1,... , N.

Let us introduce a set of binary indicator variables zi = [zi 1 ,... , zik] where

zic = 1 =

1 if yi = c, 0 otherwise.

The count of examples from c-th component:

Nc =

i=

zic.

If we know zi, the ML estimates of the Gaussian components, just like in class-conditional model, are

−6^ −4 −4 −2 0 2 4 6 8

−

0

2

4

6

8

10 y=

y=

π̂ c =

Nc N

μ̂ c =

Nc

i=

zicxi,

Σ̂ c = 1 Nc

i=

zic(xi − μ̂ c)(xi − ̂μc)T^.

The “complete data” likelihood (when z are known):

p(X, Z; π, θ) ∝

i=

∏^ k

c=

(πcN (xi; μc, Σc))zic^.

and the log:

log p(X, Z; π, θ) = const +

i=

∑^ k

c=

zic (log πc + log N (xi; μc, Σc)).

We can’t compute it, but can take the expectation w.r.t. the posterior of z, which is just γic:

Ezic∼γic [log p(xi, zic; π, θ)].

log p(X, Z; π, θ) = const +

i=

∑^ k

c=

zic (log πc + log N (xi; μc, Σc)).

Expectation of zic:

Ezic∼γic [zic] =

z∈ 0 , 1

z · γicz = γic.

The expected likelihood of the data:

Ezic∼γic [log p(X, Z; p, θ)] = const

i=

∑^ k

c=

γic (log πc + log N (xi; μc, Σc)).

If we know the parameters and indicators (assignments) we are done.

If we know the indicators but not the parameters, we can do ML estimation of the parameters – and we are done.

If we know the parameters but not the indicators, we can compute the posteriors of indicators;

But in reality we know neither the parameters nor the indicators.

Start with a guess of θ, π.

Iterate between: E-step Compute values of expected assignments, i.e. calculate γic, using current estimates of θ, π. M-step Maximize the expected likelihood, under current γic.

Repeat until convergence.