Study with the several resources on Docsity

Earn points by helping other students or get them with a premium plan

Prepare for your exams

Study with the several resources on Docsity

Earn points to download

Earn points by helping other students or get them with a premium plan

Community

Ask the community for help and clear up your study doubts

Discover the best universities in your country according to Docsity users

Free resources

Download our free guides on studying techniques, anxiety management strategies, and thesis advice from Docsity tutors

Generative Models, Optimal Classification, Bayes Classifier, Bayes Risk, Discriminant Functions, Two-Category, Equal Covariance Gaussian, Gaussian Case, Linear Discriminant, Generative Models, Maximum Likelihood, Density Estimation, Unequal Covariances, Gaussians, Decision Boundaries, Quadratic Decision Boundaries, Gaussian ML, Naive Bayes Classifier, SPAM Detection, MAP Estimate, Greg Shakhnarovich, Lecture Slides, Introduction to Machine Learning, Computer Science, Toyota Technological Institu

Typology: Lecture notes

1 / 37

This page cannot be seen from the preview

Don't miss anything!

TTIC 31020: Introduction to Machine Learning

Instructor: Greg Shakhnarovich

TTI–Chicago

October 29, 2010

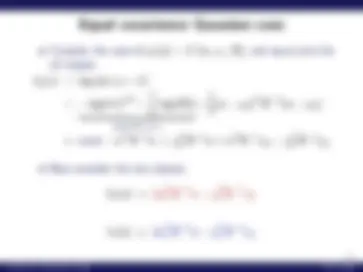

Expected classification error is minimized by

h(x) = argmax c

p (y = c | x)

p (x | y) p(y) p(x)

The Bayes classifier:

h∗(x) = argmax c

p (x | y = c) p(y = c) p(x) = argmax c

p (x | y = c) p(y = c)

= argmax c

{log pc(x) + log Pc}.

Note: p(x) is equal for all c, and can be ignored.

Expected classification error is minimized by

h(x) = argmax c

p (y = c | x)

p (x | y) p(y) p(x)

The Bayes classifier:

h∗(x) = argmax c

p (x | y = c) p(y = c) p(x) = argmax c

p (x | y = c) p(y = c)

= argmax c

{log pc(x) + log Pc}.

Note: p(x) is equal for all c, and can be ignored.

−0.2−10 −8 −6 −4 −2 0 2 4 6 8 10

−0.

0

y =2, h∗(x) =

y =1, h∗(x) =

The risk (probability of error) of Bayes classifier h∗^ is called the Bayes risk R∗. This is the minimal achievable risk for the given p(x, y) with any classifier! In a sense, R∗^ measures the inherent difficulty of the classification problem.

x

max c {p (x | c = y) Pc} dx

In case of two classes y ∈ {± 1 }, the Bayes classifier is

h∗(x) = argmax c=± 1

δc(x) = sign (δ+1(x) − δ− 1 (x)).

Decision boundary is given by δ+1(x) − δ− 1 (x) = 0.

With equal priors, this is equivalent to the (log)-likelihood ratio test:

h∗(x) = sign

log p (x | y = +1) p (x | y = −1)

Consider the case of pc(x) = N (x; μc, Σ), and equal prior for all classes.

δk(x) = log p(x | y = k)

= − log(2π)d/^2 −

log(|Σ|) ︸ ︷︷ ︸ same for all k

(x − μk)T^ Σ−^1 (x − μk)

Consider the case of pc(x) = N (x; μc, Σ), and equal prior for all classes.

δk(x) = log p(x | y = k)

= − log(2π)d/^2 −

log(|Σ|) ︸ ︷︷ ︸ same for all k

(x − μk)T^ Σ−^1 (x − μk)

∝ const − xT^ Σ−^1 x + μTk Σ−^1 x + xT^ Σ−^1 μk − μTk Σ−^1 μk

Now consider the two classes:

δk(x) ∝ 2 μTk Σ−^1 x − μTk Σ−^1 μk

δc(x) ∝ 2 μTq Σ−^1 x − μTq Σ−^1 μq

Two class discriminants:

δk(x) − δq(x) = μTk Σ−^1 x − xT^ Σ−^1 μk + μTk Σ−^1 μk − μTq Σ−^1 x − xT^ Σ−^1 μq + μTq Σ−^1 μq

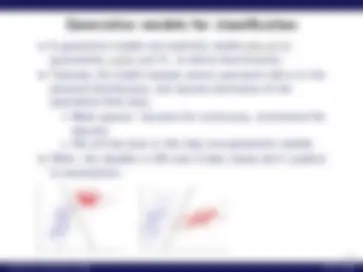

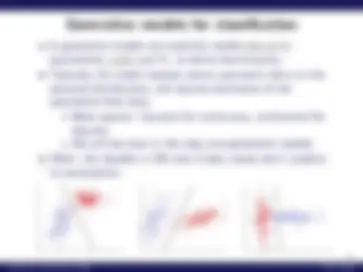

In generative models one explicitly models p(x, y) or, equivalently, pc(x) and Pc, to derive discriminants. Typically, the model imposes certain parametric form on the assumed distributions, and requires estimation of the parameters from data.

−12−10 −5 0 5 10 15 −10^ −

− −4^ −

02

46

8

−2−6 −4 −2 0 2 4 6 8

0

2

4

6

8

10

In generative models one explicitly models p(x, y) or, equivalently, pc(x) and Pc, to derive discriminants. Typically, the model imposes certain parametric form on the assumed distributions, and requires estimation of the parameters from data.

−12−10 −5 0 5 10 15 −10^ −

− −4^ −

02

46

8

−2−6 −4 −2 0 2 4 6 8

0

2

4

6

8

10

−4 −6 −4 −2 0 2 4 6 8 10 12

−

0

2 4

6

8

10

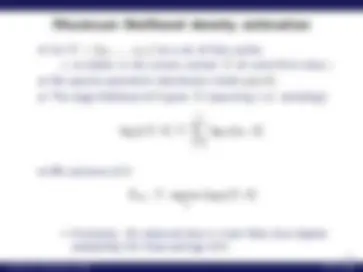

What if we remove the restriction that ∀c, Σc = Σ?

Compute ML estimate for μc, Σc for each c.

We get discriminants (and decision boundaries) quadratic in x:

δc(x) = −

xT^ Σ− c 1 x + μTc Σ− c 1 x − 〈const in x〉

(as shown in PS1).

A quadratic form in x: xT^ Ax.

What do quadratic boundaries look like in 2D?

Second-degree curves can be any conic section:

What do quadratic boundaries look like in 2D?

Second-degree curves can be any conic section:

Can all of these arise from two Gaussian classes?

Reminder: three sources of error: noise variance (irreducible), structural due to our model class, estimation due to our choice of model from that class.

In generative model, estimation error may be due to overfitting.