Part of the course materials for Discrete Mathematics (MAT 2345), taught by Dr. N. Van Cleave in Fall 2009. It covers the complexity of algorithms, the integers and division, and primes and greatest common divisors, including the concepts of time complexity and space complexity, the analysis of time complexity, and the comparison of search algorithms by the number of comparisons made. It also discusses divisibility, the distribution of prime numbers, and relatively prime integer pairs.

Dr. N. Van Cleave

Fall 2009

- Reading: Textbook, Section 3.3–3.
- Assignments:
  - Sec 3.3, due Wed: 2, 4 (justify efficiency answer), 7, 8
  - Sec 3.4, due Thur: 4, 8, 9a–d, 12, 26, 35
  - Sec 3.5, due Mon: 4, 5, 10, 13, 14a, 18, 21a–c, 26
- Attendance: Strongly Encouraged

- 3.3 Complexity of Algorithms
- 3.4 The Integers and Division
- 3.5 Primes and Greatest Common Divisors

- It is important to know whether an algorithm will produce an answer in milliseconds or in years.
- Time complexity can be described in terms of the number of operations required, rather than actual computer time, because different computers need different amounts of time to perform basic operations.
- It would be quite complicated to break down all operations into the basic bit operations that a computer uses.
- Various machines, from personal computers to supercomputers, perform basic bit operations at rates that differ by a factor of 1,000 or more.

- It is important to determine whether an algorithm will require more memory than we have available.
- Space complexity can be described as (memory needed to store one element) × (size of input), plus any additional storage required by the algorithm. It is often given in terms of the size of the input and its storage requirements.
- Considerations of space complexity are tied to the particular data structures used to implement the algorithm.

Time complexity of search algorithms, based on the number of comparisons made:

- Linear Search
- Binary Search (for simplicity, assume there are n = 2^k elements in the input list)

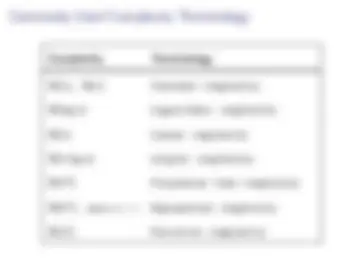

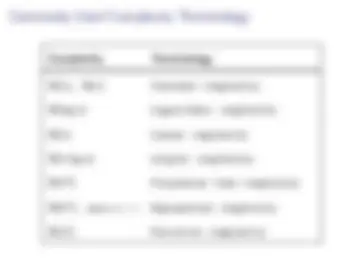
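
The comparison counts for the two searches can be checked with a short Python sketch. It is an illustration, not the pseudocode from the slides: the function names are made up here, and only comparisons between the target and list elements are counted (loop-bound comparisons, which some textbook analyses also count, are ignored). Under that assumption, linear search makes at most n comparisons, and binary search on n = 2^k elements makes k + 1.

```python
def linear_search(items, target):
    """Return (index, comparisons); index is -1 when target is absent."""
    comparisons = 0
    for i, value in enumerate(items):
        comparisons += 1  # one element comparison per position examined
        if value == target:
            return i, comparisons
    return -1, comparisons


def binary_search(items, target):
    """Search a sorted list; return (index, comparisons)."""
    comparisons = 0
    lo, hi = 0, len(items) - 1
    while lo < hi:
        mid = (lo + hi) // 2
        comparisons += 1  # compare target with the middle element
        if target > items[mid]:
            lo = mid + 1  # target can only be in the upper half
        else:
            hi = mid      # target can only be in the lower half
    comparisons += 1      # final equality check on the one remaining candidate
    if items and items[lo] == target:
        return lo, comparisons
    return -1, comparisons


data = list(range(16))          # n = 16 = 2^4, already sorted
print(linear_search(data, 15))  # worst case for linear search
print(binary_search(data, 15))
```

With n = 16, the worst case for linear search uses 16 element comparisons, while binary search uses 4 + 1 = 5.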

Complexity Terminology

| Complexity | Terminology |
|---|---|
| Θ(1), Θ(c) | Constant complexity |
| Θ(log n) | Logarithmic complexity |
| Θ(n) | Linear complexity |
| Θ(n log n) | n log n complexity |
| Θ(n^b) | Polynomial time complexity |
| Θ(b^n), where b > 1 | Exponential complexity |
| Θ(n!) | Factorial complexity |
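
To get a feel for how quickly these classes separate, the following Python sketch evaluates one representative function from each row for a few small values of n. The helper name `growth_row` and the choice b = 2 are assumptions made for illustration only.

```python
import math


def growth_row(n, b=2):
    """Evaluate one representative function per complexity class at n."""
    return {
        "log n": math.log2(n),
        "n": n,
        "n log n": n * math.log2(n),
        "n^b": n ** b,             # polynomial, here b = 2
        "b^n": b ** n,             # exponential, here b = 2
        "n!": math.factorial(n),
    }


for n in (4, 8, 16):
    print(n, growth_row(n))
```

Even at n = 16 the exponential and factorial entries are already far larger than the polynomial one.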

- A solvable problem is called tractable if there exists an algorithm with polynomial worst–case complexity to solve it.
- Even if a problem is tractable, there is no guarantee it can be solved in a reasonable amount of time, even for relatively small input values.
- Most algorithms in use have polynomial complexities of degree 4 or less.
- Solvable problems for which no algorithm with polynomial worst–case time complexity exists are called intractable.
- Usually, but not always, an extremely large amount of time is required to solve the problem for the worst cases of even small input values.
- In a few instances, an exponential or worse algorithm may be able to solve problems of reasonable size in sufficient time to be useful.
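
As an illustration of the last point, here is a brute-force Python sketch that examines subsets of a list until one sums to a target. The subset-sum problem and the function name are chosen here for illustration and do not come from the notes. In the worst case the search examines all 2^n subsets, which is fine for a handful of values and hopeless for a few hundred.

```python
from itertools import combinations


def subset_sum_brute_force(values, target):
    """Return (subset, subsets_examined); subset is None if none sums to target."""
    examined = 0
    for size in range(len(values) + 1):
        for subset in combinations(values, size):
            examined += 1
            if sum(subset) == target:
                return subset, examined
    return None, examined  # every one of the 2^n subsets was examined


print(subset_sum_brute_force([3, 5, 7, 11], 12))
print(subset_sum_brute_force([3, 5, 7, 11], 100))  # worst case: 2^4 subsets
```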

Another important class of problems, the NP–Complete problems, consists of problems in the class NP with the following property:

If any of the problems in the NP–Complete class can be solved in polynomial time, then all of them can be.

No one has been able to find such an algorithm.

It is suspected that no one ever will.

- Integers and their properties belong to a branch of mathematics called Number Theory.

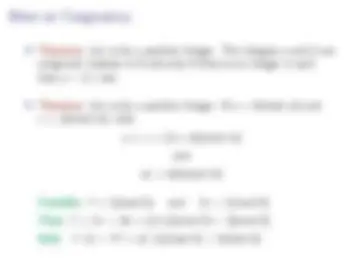

- If a and b are integers, with a ≠ 0, we say that a divides b if there is an integer c such that b = ac.

Notation:

- a | b: a divides b; a is a factor of b; b is a multiple of a
- a ∤ b: a does not divide b
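
The definition translates directly into a check on the remainder. A minimal Python sketch, with the function name `divides` chosen here for illustration:

```python
def divides(a, b):
    """Return True when a | b, i.e. b = a*c for some integer c (a must be nonzero)."""
    if a == 0:
        raise ValueError("a must be nonzero")
    return b % a == 0  # remainder 0 means b is a multiple of a


print(divides(3, 12))   # 12 = 3 * 4
print(divides(5, 12))   # no integer c with 12 = 5 * c
print(divides(7, 0))    # 0 = 7 * 0, so every nonzero integer divides 0
```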

- Theorem (the “Division Algorithm”). Let a be an integer and d be a positive integer. Then there are unique integers q and r, with 0 ≤ r < d, such that a = dq + r.
- In the equality given in the division algorithm, d is called the divisor, a is called the dividend, q is called the quotient, and r is called the remainder.

- Let a be an integer and m be a positive integer. We denote by (a mod m) the remainder when a is divided by m.

From this definition, it follows that if (a mod m) = r, then a = qm + r for some integer q, and 0 ≤ r < m.
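
For a positive divisor, Python's built-in `divmod` returns exactly the q and r of the division algorithm, including for negative dividends, because it rounds the quotient toward negative infinity. A short sketch (the wrapper name is an assumption for illustration):

```python
def division_algorithm(a, d):
    """Return (q, r) with a = d*q + r and 0 <= r < d, for a positive divisor d."""
    if d <= 0:
        raise ValueError("d must be a positive integer")
    q, r = divmod(a, d)  # floor division keeps r in the range 0 <= r < d
    return q, r


print(division_algorithm(101, 11))  # 101 = 11 * 9 + 2
print(division_algorithm(-11, 3))   # -11 = 3 * (-4) + 1, so -11 mod 3 = 1
```

Note that the remainder is never negative: −11 mod 3 is 1, not −2.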

- If a and b are integers, and m is a positive integer, then a is congruent to b modulo m if m divides a − b. This is denoted by: a ≡ b (mod m)

Note: a ≡ b (mod m) exactly when a mod m = b mod m, that is, when a and b leave the same remainder on division by m.

Consider: (17 − 5) mod 6 = 12 mod 6 = 0
Also: 17 mod 6 = 5 and 5 mod 6 = 5
Thus: 17 ≡ 5 (mod 6)
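
The same check in Python, as a small sketch (the function name is chosen for illustration). It tests the definition directly, m | (a − b), and the example compares that against the equal-remainders form:

```python
def is_congruent(a, b, m):
    """Return True when a ≡ b (mod m), i.e. m divides a - b (m must be positive)."""
    if m <= 0:
        raise ValueError("m must be a positive integer")
    return (a - b) % m == 0


print(is_congruent(17, 5, 6))   # 6 divides 12
print(17 % 6 == 5 % 6)          # same remainder, same conclusion
print(is_congruent(24, 14, 6))  # 6 does not divide 10
```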

- Hashing Functions: assign memory locations to values, records (keys), or computer files for easy retrieval
- Pseudorandom Numbers: systematically generate a sequence of numbers that have properties of randomly chosen numbers
- Cryptology: encryption, to make a message secret; decryption, to determine the original message
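
Each of these applications is commonly built on modular arithmetic. The Python sketch below shows one standard textbook form of each; the specific formulas, function names, and parameter values are assumptions for illustration, since the notes above name only the applications: a hashing function h(k) = k mod m, a linear congruential generator x_{n+1} = (a·x_n + c) mod m, and a shift cipher f(p) = (p + 3) mod 26.

```python
def hash_location(key, m):
    """Hashing function h(k) = k mod m: map a key to one of m memory locations."""
    return key % m


def linear_congruential(seed, a, c, m, count):
    """Return the first `count` terms of x_{n+1} = (a*x_n + c) mod m, starting at seed."""
    values = []
    x = seed
    for _ in range(count):
        values.append(x)
        x = (a * x + c) % m
    return values


def shift_encrypt(text, shift=3):
    """Shift each ASCII letter by `shift` positions mod 26; output is uppercase."""
    out = []
    for ch in text:
        if ch.isascii() and ch.isalpha():
            p = ord(ch.upper()) - ord("A")            # letter position 0..25
            out.append(chr((p + shift) % 26 + ord("A")))
        else:
            out.append(ch)                            # leave spaces etc. unchanged
    return "".join(out)


def shift_decrypt(text, shift=3):
    """Undo shift_encrypt by shifting in the opposite direction."""
    return shift_encrypt(text, -shift)


print(hash_location(25, 7))
print(linear_congruential(seed=3, a=7, c=4, m=9, count=10))
print(shift_encrypt("MEET YOU IN THE PARK"))
print(shift_decrypt(shift_encrypt("MEET YOU IN THE PARK")))
```

The generator with these small parameters repeats after 9 terms, which shows why the sequence is only pseudorandom.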

- A positive integer p > 1 is called prime if the only positive factors of p are 1 and p.
- A positive integer that is greater than 1 and is not prime is called composite.
- The Fundamental Theorem of Arithmetic. Every positive integer greater than 1 can be written uniquely as a prime or as the product of two or more primes, where the prime factors are written in order of non–decreasing size.
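
Both definitions can be explored with trial division. The Python sketch below is one simple approach, not necessarily the method used later in the course, and the function names are chosen for illustration. It relies on the fact that a composite n has a factor no larger than √n, and it returns the prime factors in non-decreasing order, matching the form in the theorem.

```python
import math


def is_prime(n):
    """Return True when n is prime, using trial division up to sqrt(n)."""
    if n < 2:
        return False
    for d in range(2, math.isqrt(n) + 1):
        if n % d == 0:
            return False  # d is a factor other than 1 and n
    return True


def prime_factors(n):
    """Return the prime factors of n > 1 in non-decreasing order."""
    if n < 2:
        raise ValueError("n must be greater than 1")
    factors = []
    d = 2
    while d * d <= n:
        while n % d == 0:   # divide out each prime factor completely
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)   # whatever remains is itself prime
    return factors


print(is_prime(97), is_prime(91))  # 91 = 7 * 13 is composite
print(prime_factors(100))          # 100 = 2 * 2 * 5 * 5
print(prime_factors(999))          # 999 = 3 * 3 * 3 * 37
```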