Study with the several resources on Docsity

Earn points by helping other students or get them with a premium plan

Prepare for your exams

Study with the several resources on Docsity

Earn points to download

Earn points by helping other students or get them with a premium plan

Community

Ask the community for help and clear up your study doubts

Discover the best universities in your country according to Docsity users

Free resources

Download our free guides on studying techniques, anxiety management strategies, and thesis advice from Docsity tutors

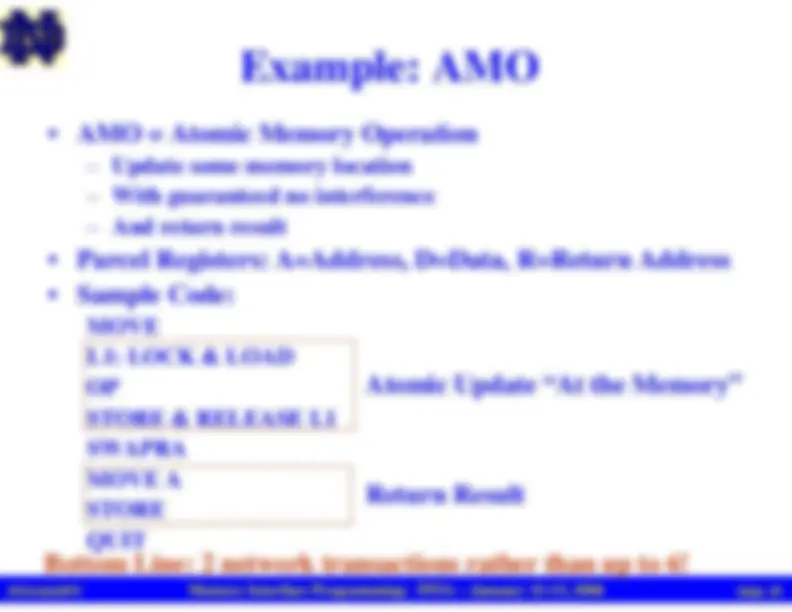

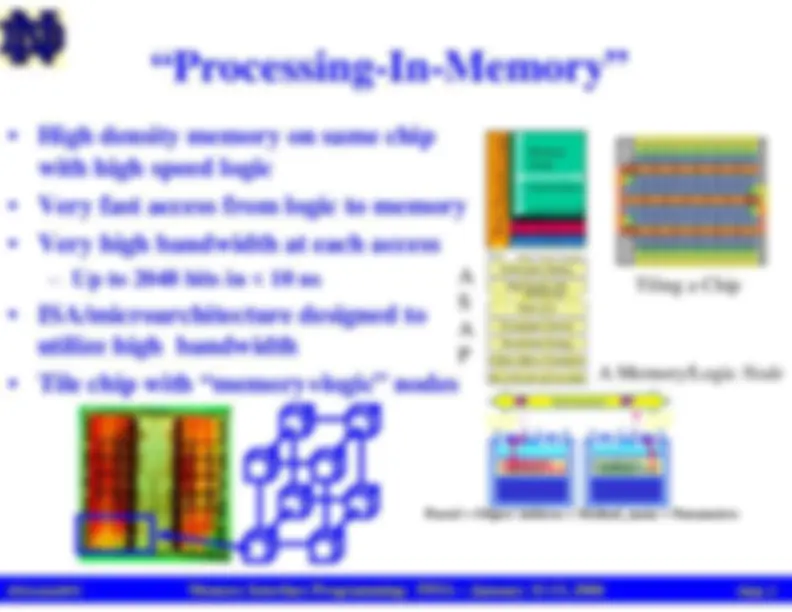

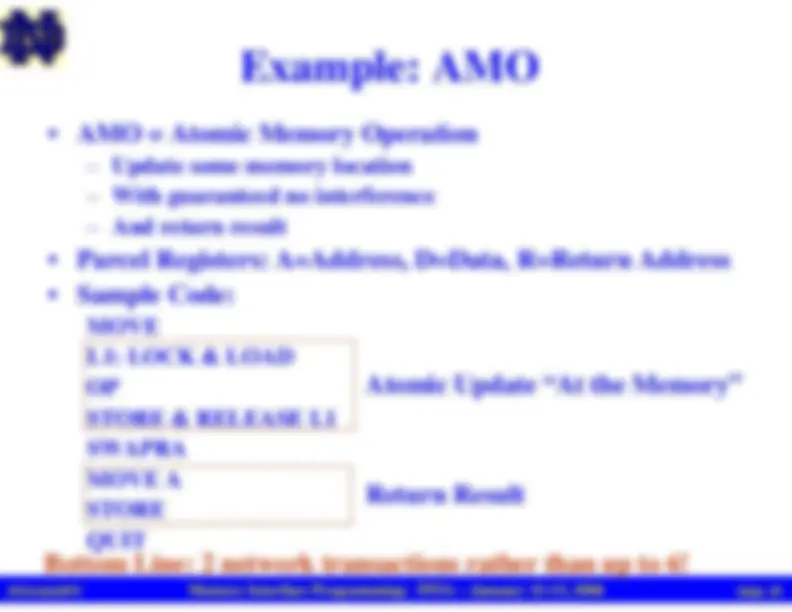

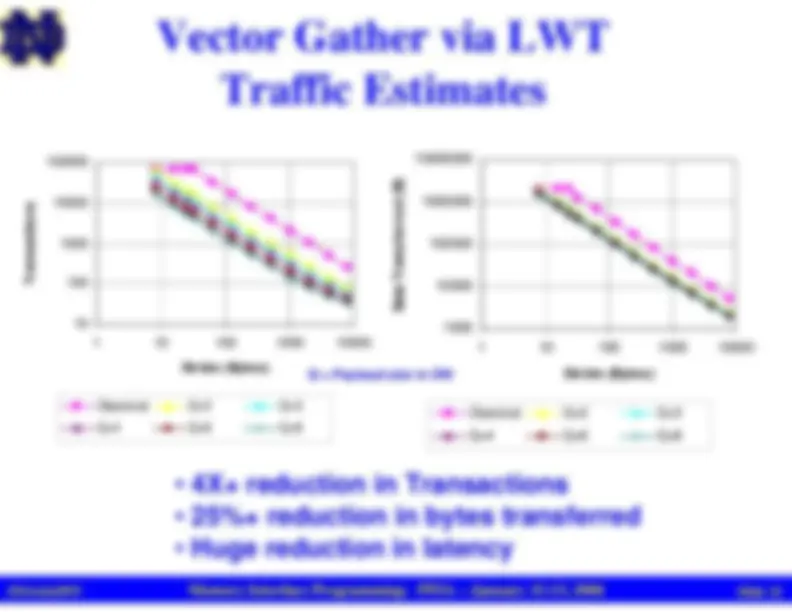

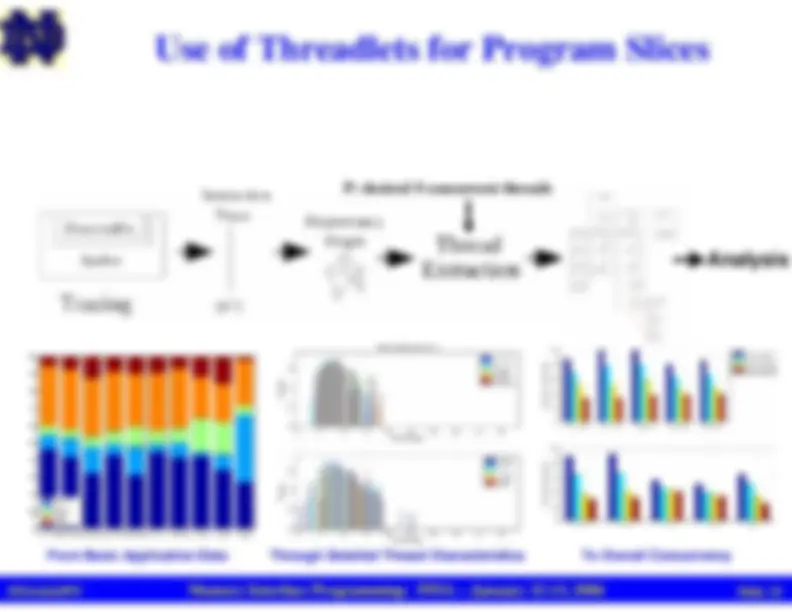

This presentation, given by Peter M. Kogge at IWIA 2004, covers the concept of making memory interfaces programmable to reduce the latency and bandwidth problems of conventional systems, particularly shared-memory systems. It introduces threadlets, which are traveling threads that process data in memory, and Piglet, a new ISA for governing these threadlets. It also covers assumptions, processing-in-memory, and performance improvements.

Memory Interface Programming: IWIA – January 12-13, 2004

Interconnect: incoming parcels, outgoing parcels

Parcel = Object Address + Method_name + Parameters
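The parcel definition above can be sketched as a small data structure. This is only an illustration: the class names `Parcel` and `MemoryNode`, the `deliver` method, and the `add_to_field` example method are hypothetical and do not come from the slides, which state only that a parcel carries an object address, a method name, and parameters.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple


@dataclass(frozen=True)
class Parcel:
    """Parcel = Object Address + Method_name + Parameters (per the slide)."""
    object_address: int          # address of the target object
    method_name: str             # method to invoke at that object
    parameters: Tuple[Any, ...]  # arguments carried with the request


class MemoryNode:
    """Hypothetical node that owns objects and executes incoming parcels."""

    def __init__(self) -> None:
        self.objects: Dict[int, Dict[str, Any]] = {}
        self.methods: Dict[str, Callable[..., Any]] = {}

    def deliver(self, parcel: Parcel) -> Any:
        # Decode the parcel: find the object, look up the method,
        # and apply it locally, next to the data.
        target = self.objects[parcel.object_address]
        method = self.methods[parcel.method_name]
        return method(target, *parcel.parameters)


def add_to_field(obj: Dict[str, Any], field: str, amount: int) -> int:
    """Example method (assumed): update a field in place, return the new value."""
    obj[field] += amount
    return obj[field]


node = MemoryNode()
node.objects[0x1000] = {"count": 40}
node.methods["add_to_field"] = add_to_field
result = node.deliver(Parcel(0x1000, "add_to_field", ("count", 2)))
```

The point of the sketch is that the request travels to the data: the caller sends one parcel and the node performs the read-modify-write itself.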

Performance Monitor

Wide Register File

Wide ALUs

Permutation NetworkThread State Package

Global Address Translation Parcel Decode and Assembly

Broadcast Bus

Row Decode Logic

Sense Amplifiers/LatchesColumn Multiplexing

MemoryArray 1 “Full Word”/Row 1 Column/Full Word

Bit

“Wide Word” Interface

Address

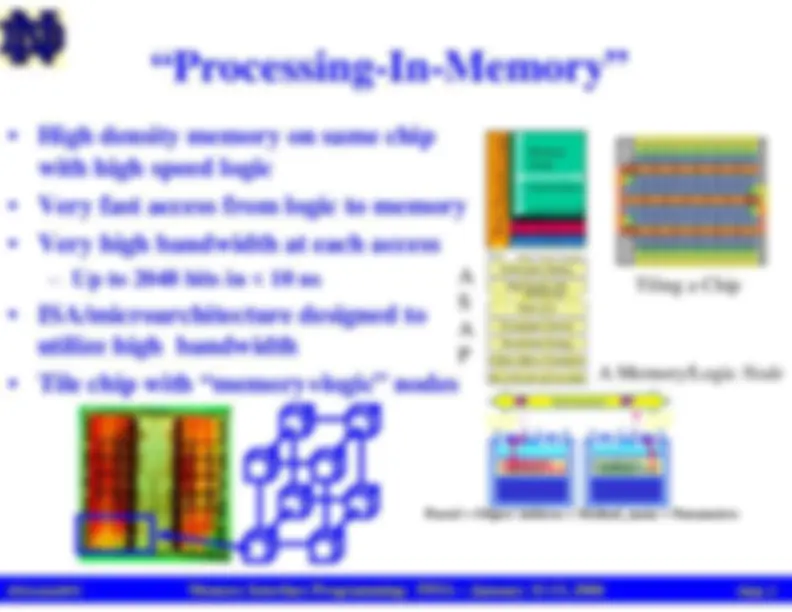

A Memory/Logic

Node

Tiling a Chip

“Looks like memory” at Interfaces

ISA: 16-bit multithreaded/SIMD

“Thread” = IP/FP pair

“Registers” = wide words in frames

Multiple nodes per chip

1 node logic area ~ 10.3 KB SRAM (comparable to MIPS R3000)

TSMC 0.18 µm 1-node in fab now

3.2 million transistors (4-node)

[Figure: node datapath. Labeled blocks: Thread Queue; Frame Memory; Instr Memory; ALU; Data Memory; Write-Back Logic. Parcel in (via chip data bus); Parcel out (via chip data bus).]

[Figure: system view. Memory, CPU, and PIM attached to a memory interconnect network.]

Memory Interface Programming: IWIA – January 12-13, 2004

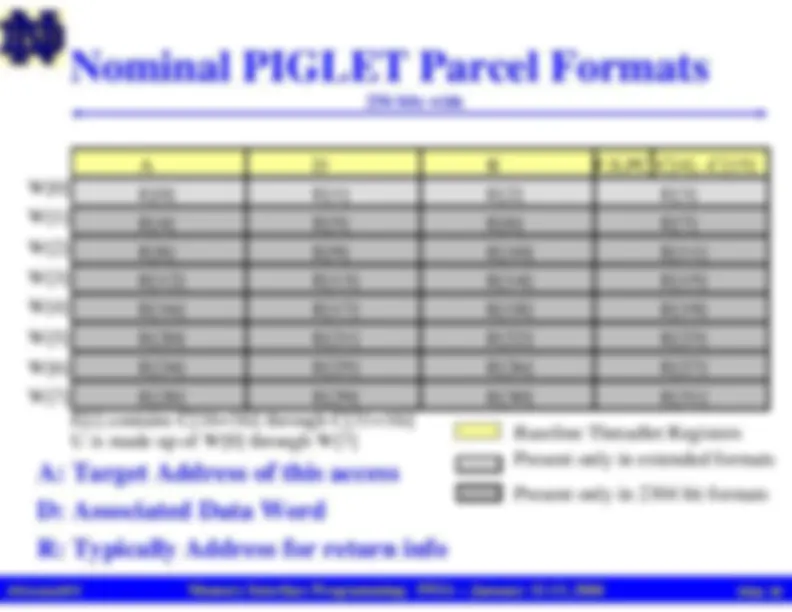

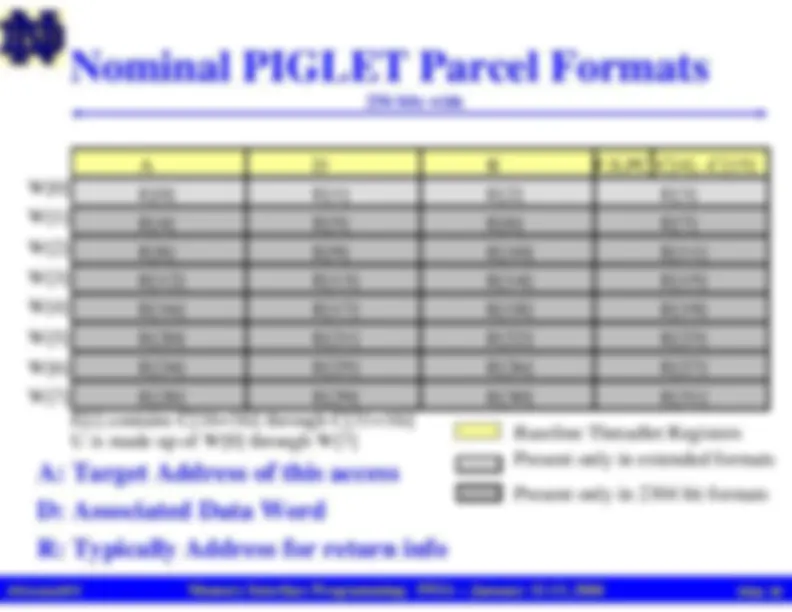

Baseline Threadlet Registers

[Figure: register layout. Fields shown: A; D; R; F, S, PC and C[4]...C[15]; E[0] through E[31]; W[0] through W[7].]

E[i] contains C[16+16i] through C[31+16i].

U is made up of W[0] through W[7].

Legend: some fields are present only in extended formats; others are present only in 2304 bit formats.
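The indexing rule for the E registers can be checked with a few lines of arithmetic. The sketch below takes only the rule "E[i] contains C[16+16i] through C[31+16i]" and the range E[0] through E[31] from the slide; the function names and constants are hypothetical, and nothing here assumes a width for a C entry.

```python
C_PER_E = 16    # each E[i] spans 16 consecutive C entries (from the slide)
FIRST_E_C = 16  # E[0] starts at C[16] (from the slide)
NUM_E = 32      # E[0] through E[31] (from the slide)


def c_range_for_e(i: int) -> tuple:
    """Return (first, last) C indices covered by E[i], inclusive."""
    if not 0 <= i < NUM_E:
        raise IndexError(f"E[{i}] is out of range")
    first = FIRST_E_C + C_PER_E * i
    return first, first + C_PER_E - 1


def e_for_c(k: int) -> tuple:
    """Return (i, offset) such that C[k] is entry `offset` of E[i]."""
    last_c = FIRST_E_C + C_PER_E * NUM_E - 1
    if not FIRST_E_C <= k <= last_c:
        raise IndexError(f"C[{k}] is not inside any E register")
    return divmod(k - FIRST_E_C, C_PER_E)


first_e = c_range_for_e(0)
last_e = c_range_for_e(31)
```

Under this rule E[0] covers C[16] through C[31] and E[31] covers C[512] through C[527], so the C entries below index 16 fall outside the E registers.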

Memory Interface Programming: IWIA – January 12-13, 2004

Memory Interface Programming: IWIA – January 12-13, 2004

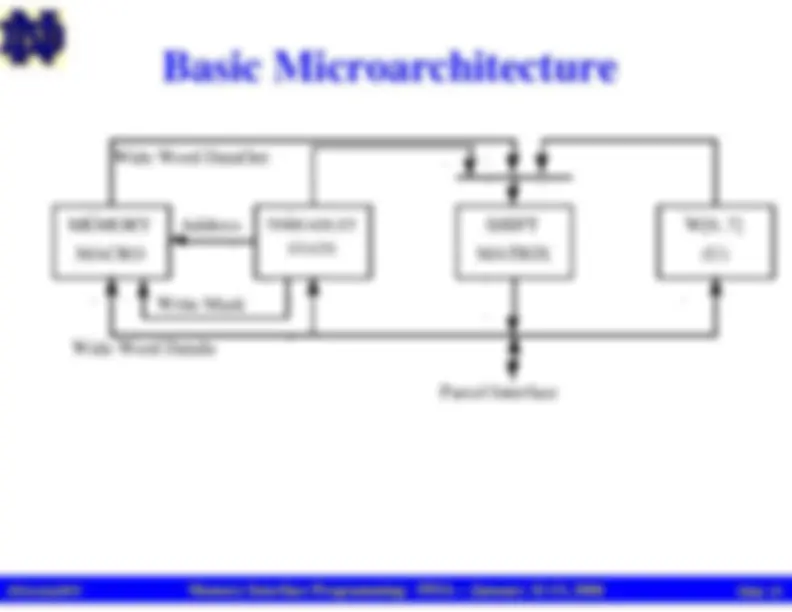

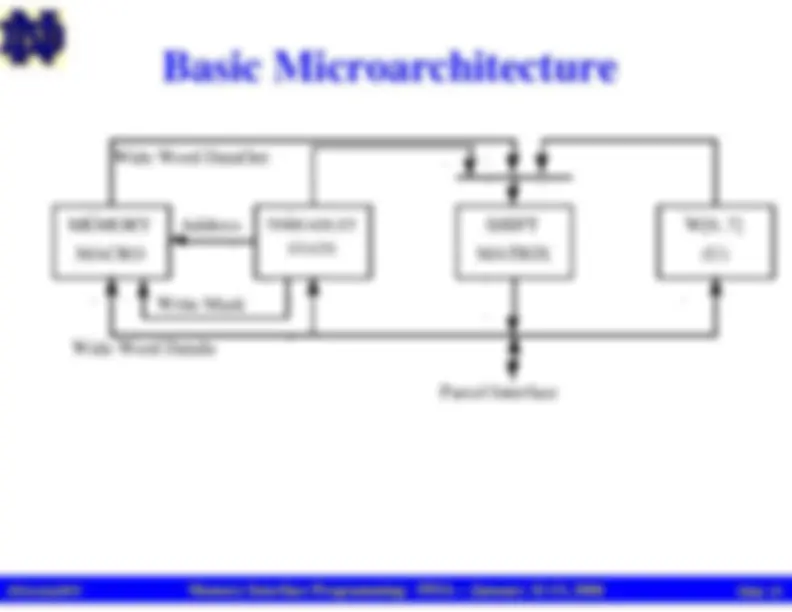

[Figure: memory access path. Labeled blocks: Memory Macro; Shift Matrix; W[0..7] (U). Signals: Wide Word DataOut; Wide Word DataIn; Write Mask; Address; Parcel Interface.]

Memory Interface Programming: IWIA – January 12-13, 2004

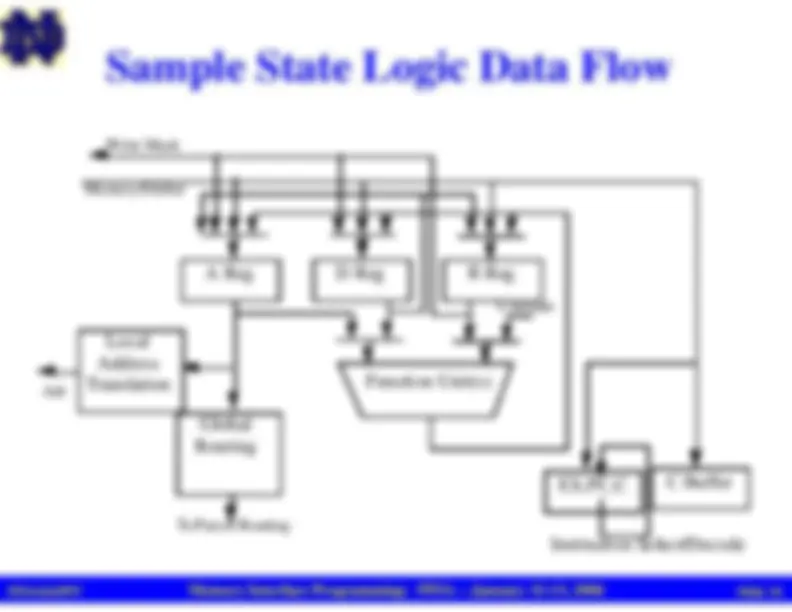

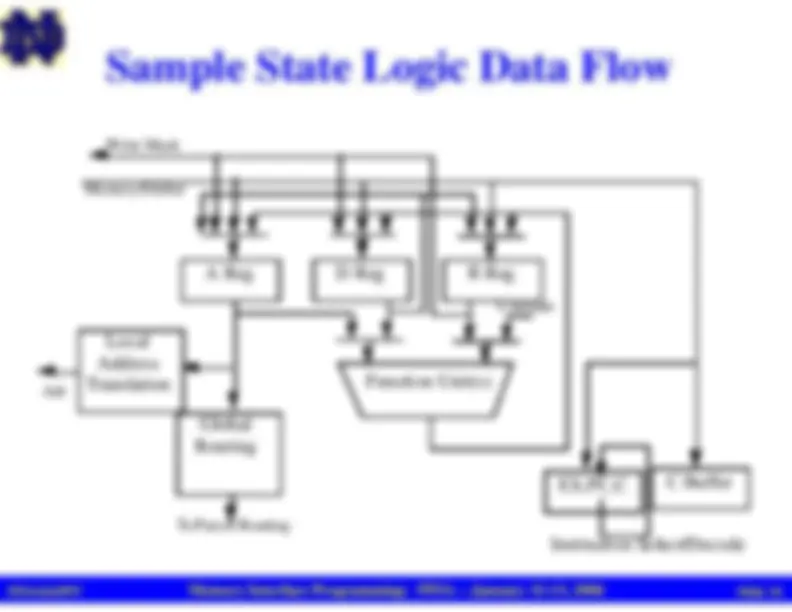

[Figure: threadlet execution logic. Labeled blocks: A Reg; D Reg; R Reg; Local Address Translation; Global Routing; Function Unit(s); C Buffer; F, S, PC, C; Instruction Select/Decode.]

Memory Interface Programming: IWIA – January 12-13, 2004

Memory Interface Programming: IWIA – January 12-13, 2004

Memory Interface Programming: IWIA – January 12-13, 2004

Memory Interface Programming: IWIA – January 12-13, 2004

IWIA04.PPT

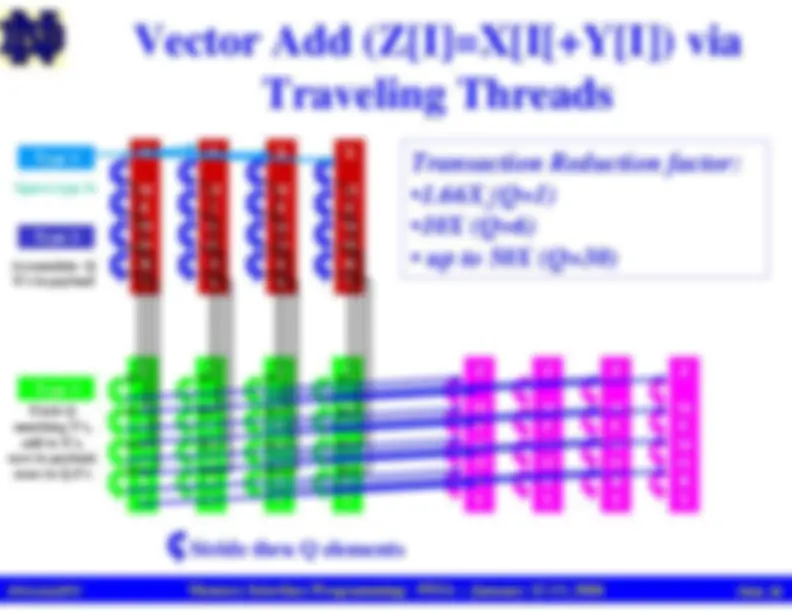

Type 1: Accumulate Q X’s in payload; spawn type 2s.

Type 2: Fetch Q matching Y’s, add to X’s, save in payload.

Type 3: Store in Q Z’s.
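The three threadlet types can be read as stages of an element-wise vector add carried out where the data lives. The sketch below simulates that reading in ordinary Python. It rests on several assumptions not stated on the slide: that the computation is Z = X + Y, that type 2 hands its payload to type 3, the value `Q = 4`, and all function names. Real threadlets would travel between memory nodes; here each hop is just a function call.

```python
Q = 4  # assumed number of elements carried in one threadlet payload


def type3_store(z_mem, base, payload):
    # Type 3: store the Q Z's from the payload into Z's memory.
    z_mem[base:base + len(payload)] = payload


def type2_fetch_add(y_mem, z_mem, base, payload):
    # Type 2: fetch the matching Y's, add them to the X's, save in payload.
    ys = y_mem[base:base + len(payload)]
    payload = [x + y for x, y in zip(payload, ys)]
    # Assumed hand-off: continue as a type 3 threadlet at Z's memory.
    type3_store(z_mem, base, payload)


def type1_accumulate(x_mem, y_mem, z_mem):
    # Type 1: walk X, accumulate Q X's in a payload, spawn a type 2 per group.
    for base in range(0, len(x_mem), Q):
        payload = x_mem[base:base + Q]
        type2_fetch_add(y_mem, z_mem, base, payload)


x = list(range(1, 11))        # 1, 2, ..., 10
y = [10 * v for v in x]       # 10, 20, ..., 100
z = [0] * len(x)
type1_accumulate(x, y, z)
```

Each payload carries Q operands between the three arrays, so under these assumptions the number of hops scales with the number of groups rather than the number of elements.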